Parallel Index Building Execution Plan

SQL Server doesn't support parallel modifications to a b-tree index.

That might sound surprising. After all, you can certainly write to the same b-tree index from multiple sessions concurrently. For example, two sessions can happily write alternating odd and even numbers to the same integer b-tree index. So long as both sessions execute on different schedulers and take row locks, there will be no blocking and you'll get true concurrency.

No, what I mean is: a single session can't write to a b-tree index using more than one thread. No parallel plan modifications of a b-tree index, in other words. It's a bit like the lack of parallel backward-ordered scans. There's no reason it couldn't be implemented, but it hasn't been so far.

You may have thought SQL Server would use a regular parallel scan to read the index source data, optionally sort it into index key order, then add those rows to the index in parallel. This would indeed work, even without sorting, but SQL Server just can't do it.

In case you're wondering, sorting into destination key order is an optimization. The resulting index would still be correct without it, but you'd be inserting rows essentially at random into a b-tree, with all the random I/O and page splitting that would entail.
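To make the cost of unsorted inserts concrete, here is a minimal toy model in Python. It is only a sketch and not how SQL Server's storage engine works: the names `PAGE_CAPACITY` and `count_page_splits` are hypothetical, keys are assumed unique, and the model makes the simplifying assumption that an insert past the current end of the index starts a fresh page instead of splitting a full one. It counts how many full pages have to be split when the same keys arrive in sorted versus random order.

```python
import bisect
import random

PAGE_CAPACITY = 8  # hypothetical rows per leaf page; tiny on purpose


def count_page_splits(keys, capacity=PAGE_CAPACITY):
    """Insert unique keys one at a time into a toy leaf level; count page splits."""
    pages = []   # leaf pages in key order, each a sorted list of keys
    splits = 0
    for key in keys:
        if not pages:
            pages.append([key])
            continue
        # Target page: the last page whose lowest key is <= key (else the first page).
        lows = [page[0] for page in pages]
        i = max(bisect.bisect_right(lows, key) - 1, 0)
        page = pages[i]
        if len(page) < capacity:
            bisect.insort(page, key)
        elif i == len(pages) - 1 and key > page[-1]:
            # Assumption: appending past the end of the index allocates a new page.
            pages.append([key])
        else:
            # Full page inside the existing key range: split it roughly in half.
            mid = capacity // 2
            left, right = page[:mid], page[mid:]
            bisect.insort(left if key < right[0] else right, key)
            pages[i:i + 1] = [left, right]
            splits += 1
    return splits


keys = list(range(2000))
shuffled = keys[:]
random.Random(42).shuffle(shuffled)  # fixed seed so runs are repeatable

print("splits, sorted order:", count_page_splits(keys))      # 0 in this model
print("splits, random order:", count_page_splits(shuffled))
```

In this model, keys arriving in sorted order only ever append to the end, so no page is split, while the shuffled input keeps landing on full pages in the middle of the key range. Both orders produce a correct set of pages; only the amount of work differs, which is the sense in which the sort is an optimization.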

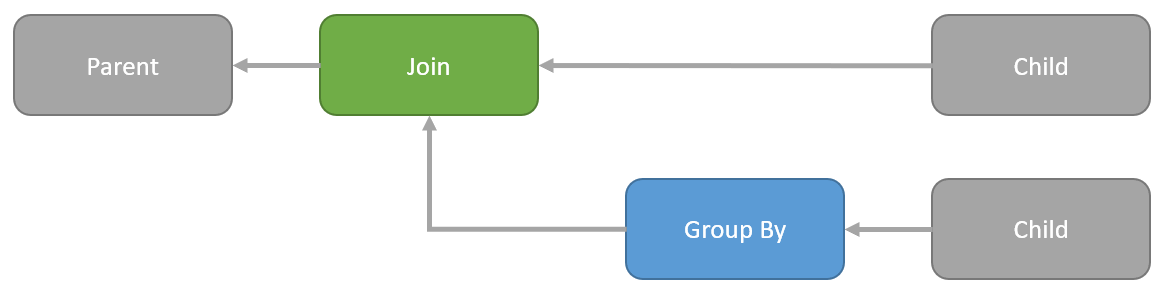

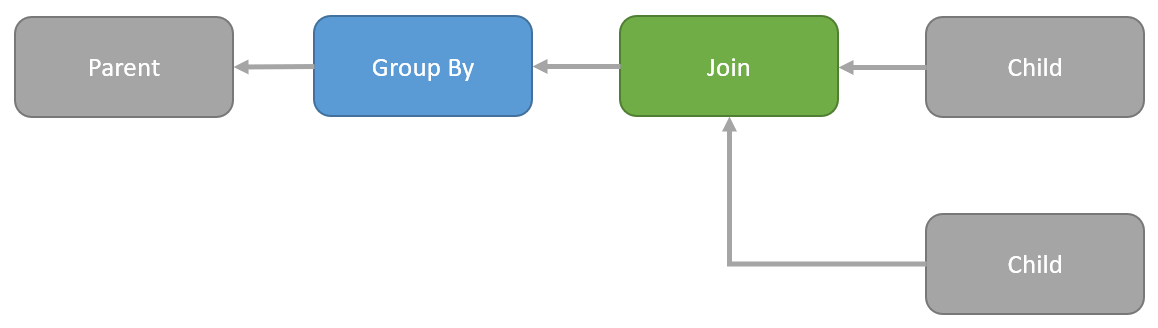

OK, you say, but what about parallel index builds? They've been around for a long time in premium editions and certainly seem to modify a single b-tree in parallel. Yes, they do seem to, but SQL Server cheats.

— Paul White (@SQL_Kiwi) June 20, 2024