Closest Match with Sort Rewinds

In When Do SQL Server Sorts Rewind? I described how most sorts can only rewind when they contain at most one row. The exception is in-memory sorts, which can rewind at most 500 rows and 16KB of data.

These are certainly tight restrictions, but we can still make use of them on occasion.

To illustrate, I am going reuse a demo Itzik Ben-Gan provided in part one of his Closest Match series, specifically solution 2 (modified value range and indexing).

As Itzik’s title suggests, the task is to find the closest match for a value in one table in a second table.

As Itzik describes it:

The challenge is to match to each row from T1 the row from T2 where the absolute difference between T2.

valand T1.valis the lowest. In case of ties (multiple matching rows in T2), match the top row based onvalascending,keycolascending order.That is, the row with the lowest value in the

valcolumn, and if you still have ties, the row with the lowestkeycolvalue. The tiebreaker is used to guarantee determinism.

Setup

-- Itzik Ben-Gan's number generator

CREATE OR ALTER FUNCTION dbo.GetNums(@low AS BIGINT, @high AS BIGINT) RETURNS TABLE

AS

RETURN

WITH

L0 AS (SELECT c FROM (SELECT 1 UNION ALL SELECT 1) AS D(c)),

L1 AS (SELECT 1 AS c FROM L0 AS A CROSS JOIN L0 AS B),

L2 AS (SELECT 1 AS c FROM L1 AS A CROSS JOIN L1 AS B),

L3 AS (SELECT 1 AS c FROM L2 AS A CROSS JOIN L2 AS B),

L4 AS (SELECT 1 AS c FROM L3 AS A CROSS JOIN L3 AS B),

L5 AS (SELECT 1 AS c FROM L4 AS A CROSS JOIN L4 AS B),

Nums AS (SELECT ROW_NUMBER() OVER(ORDER BY (SELECT NULL)) AS rownum

FROM L5)

SELECT TOP(@high - @low + 1) @low + rownum - 1 AS n

FROM Nums

ORDER BY rownum;

GO

-- Test tables

CREATE TABLE dbo.T1

(

keycol INT NOT NULL IDENTITY

CONSTRAINT PK_T1 PRIMARY KEY,

val INT NOT NULL,

othercols BINARY(100) NOT NULL

CONSTRAINT DFT_T1_col1 DEFAULT(0xAA)

);

GO

CREATE TABLE dbo.T2

(

keycol INT NOT NULL IDENTITY

CONSTRAINT PK_T2 PRIMARY KEY,

val INT NOT NULL,

othercols BINARY(100) NOT NULL

CONSTRAINT DFT_T2_col1 DEFAULT(0xBB)

);-- Load test data with many duplicates

DECLARE

@numrowsT1 AS INT = 1000000,

@maxvalT1 AS INT = 1000,

@numrowsT2 AS INT = 1000000,

@maxvalT2 AS INT = 1000;

INSERT INTO dbo.T1 WITH(TABLOCK) (val)

SELECT ABS(CHECKSUM(NEWID())) % @maxvalT1 + 1 AS val

FROM dbo.GetNums(1, @numrowsT1) AS Nums

OPTION (QUERYTRACEON 2332);

INSERT INTO dbo.T2 WITH(TABLOCK) (val)

SELECT ABS(CHECKSUM(NEWID())) % @maxvalT2 + 1 AS val

FROM dbo.GetNums(1, @numrowsT2) AS Nums

OPTION (QUERYTRACEON 2332);The undocumented trace flag 2332 is there to achieve minimally-logged bulk INSERT…SELECT into the empty clustered table.

-- Nonclustered indexes

CREATE INDEX idx_val_key ON dbo.T1(val, keycol) INCLUDE(othercols);

CREATE INDEX idx_val_key ON dbo.T2(val, keycol) INCLUDE(othercols);

CREATE INDEX idx_valD_key ON dbo.T2(val DESC, keycol) INCLUDE(othercols);Test Query

Still reusing Itzik’s code, solution 2 is:

DECLARE

@1 integer, @2 integer, @3 varbinary(1),

@4 integer, @5 integer, @6 varbinary(1);

SELECT

@1 = T1.keycol,

@2 = T1.val,

@3 = SUBSTRING(T1.othercols, 1, 1),

@4 = A.keycol,

@5 = A.val,

@6 = SUBSTRING(A.othercols, 1, 1)

FROM dbo.T1

CROSS APPLY

( SELECT TOP (1) D.*

FROM ( SELECT TOP (1) *

FROM dbo.T2

WHERE T2.val < T1.val

ORDER BY T2.val DESC, T2.keycol

UNION ALL

SELECT TOP (1) *

FROM dbo.T2

WHERE T2.val >= T1.val

ORDER BY T2.val, T2.keycol ) AS D

ORDER BY ABS(D.val - T1.val), D.val, D.keycol ) AS A;The only change I have made is to consume the million-row output using variable assignment. If you prefer, you can ‘discard results’ after they’re received in SSMS instead. The important thing is not to let the display time dominate.

Performance Spool

The interesting thing about solution 2 is that table T1 contains a large number of duplicate val values.

There is an element of chance due to the NEWID calls in the setup script, but there will be very likely close to 1,000 distinct values (with 1,000 copies of each) in the million-row data set.

Even with optimal indexes, finding the closest match in T2 over and over again for the same T1 val is inefficient. This is a great opportunity for a performance spool.

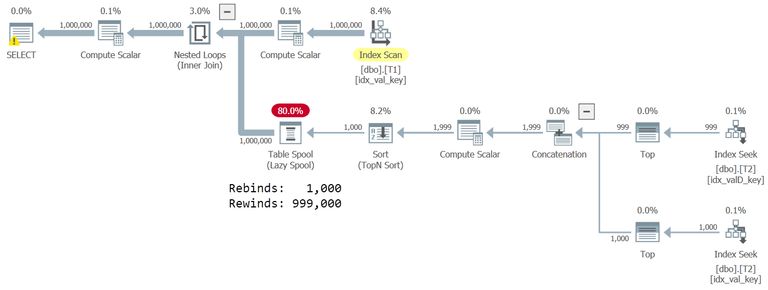

The execution plan for the test query is:

The optimizer adds a spool to the inner side of the nested loops apply. When the correlation value val does not change between loop iterations, SQL Server can reuse the previous result cached in the spool. Reading val in order from T1 using the index ensures duplicates are grouped together, maximizing the chance of a spool rewind (“cache hit”).

As shown in the annotation, the spool rebinds (“cache miss”) 1,000 times, and rewinds (“cache hit”) 999,000 times. The plan operators below the spool (Top N Sort etc.) only execute 1,000 times.

This plan executes in about 850ms on my SQL Server 2019 laptop.

No Performance Spool

We can disable the performance spool with documented trace flag 8690, or equivalently the new query hint NO_PERFORMANCE_SPOOL:

DECLARE

@1 integer, @2 integer, @3 varbinary(1),

@4 integer, @5 integer, @6 varbinary(1);

SELECT

@1 = T1.keycol, @2 = T1.val, @3 = SUBSTRING(T1.othercols, 1, 1),

@4 = A.keycol, @5 = A.val, @6 = SUBSTRING(A.othercols, 1, 1)

FROM dbo.T1

CROSS APPLY

( SELECT TOP (1) D.*

FROM ( SELECT TOP (1) *

FROM dbo.T2

WHERE T2.val < T1.val

ORDER BY T2.val DESC, T2.keycol

UNION ALL

SELECT TOP (1) *

FROM dbo.T2

WHERE T2.val >= T1.val

ORDER BY T2.val, T2.keycol ) AS D

ORDER BY ABS(D.val - T1.val), D.val, D.keycol ) AS A

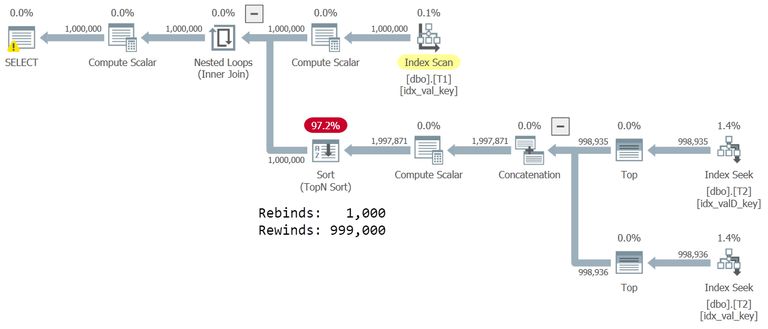

OPTION(QUERYTRACEON 8690);The execution plan is the same as before, just without the spool:

Performance is awful: 27,000ms.

The Sort Does Not Rewind (Much)

Notice the Sort reports 1,000 rebinds and 999,000 rewinds just like the spool did. Nevertheless, the operators below it all execute close to 1,000,000 times. They produce many more rows than they did in the performance spool plan.

This explains the dramatic performance impact. The Sort does not report rebinds and rewinds accurately in this case.

As explained in When Do SQL Server Sorts Rewind? this non-in-memory sort can only rewind when it contains a maximum of one row. For all but one value of T1.val, the two inner-side seeks will both find a row. The Concatenation operator combines these for a total of two rows, meaning the Sort cannot rewind.

If you look very carefully at the execution plan properties, you will find the operators below the Sort execute something like 998,936 times. The only time the Sort can rewind is when the uppermost Index Seek (T2.val < T1.val) does not find a row. The lower seek (T2.val >= T1.val) always finds a row.

For the lowest value of T1.val, the Sort receives one row, and so can rewind for all subsequent T1.val duplicates. This is a small win in the context of the whole query. The other 999 distinct values in T1 result in two rows entering the Sort, so no rewinds.

Achieving Sort Rewinds with a Rewrite

Recapping what we know:

- In-memory sorts can rewind up to 500 rows and 16KB

- Other sorts can rewind a maximum of one row

Let’s say this query is part of a bigger statement that cannot be broken up, and performance spools elsewhere in the execution plan are deeply suboptimal.

Can we disable performance spools and rewrite this part of the query so the Sorts can rewind?

Yes we can.

We can sort one row from each index seek separately, then use an in-memory sort of the resulting two rows.

Challenges

There are a couple of hurdles to overcome.

First, the optimizer will normally optimize away a sort of one row as redundant. Remember, we need a Sort operator for its caching ability, not to do any actual sorting.

One way to achieve this is to add a Top with a variable containing the value ‘1’ and an incompatible ORDER BY.

To illustrate, the code snippet for the ‘highest value below’ seek becomes:

DECLARE @T bigint = 1;

...

OUTER APPLY

(

-- 'Sort' one row (cache)

SELECT TOP (1) SQ1.*

FROM

(

-- Highest value below target

-- Hide Top (1) in a variable

SELECT TOP (@T) T2.*

FROM dbo.T2 AS T2

WHERE T2.val < T1.val

ORDER BY T2.val DESC, T2.keycol ASC

) AS SQ1

-- Different sort order

ORDER BY SQ1.val ASC, SQ1.keycol ASC

) AS CA1The code for the ‘lowest value above or equal’ seek is very similar, but uses CROSS APPLY because we are guaranteed to find a match.

The second part of the solution is to combine the values found from each seek below an in-memory sort. Recall from the linked article that an in-memory sort requires its inputs to be exclusively Constant Scan or Compute Scalar operators. We can achieve this with a VALUES clause:

...

CROSS APPLY

(

SELECT TOP (1) D.*

FROM

(

VALUES

-- Data from the index seeks

(CA1.keycol, CA1.val, CA1.othercols),

(CA2.keycol, CA2.val, CA2.othercols)

) AS D

ORDER BY ABS(D.val - T1.val) ASC, D.val ASC, D.keycol ASC

) AS AAs a final step, we will add an OPTIMIZE FOR hint to ensure the optimizer doesn’t see the literal value 1 for the variable @T even if a statement-level recompile occurs or OPTION (RECOMPILE) is used in future. (As a side note, assigning the result to variables prevents the Parameter Embedding Optimization (PEO), but that’s just a demo side-effect we shouldn’t rely on here).

Completed Rewrite

The finished code looks like this:

-- Bit buckets

DECLARE

@1 integer, @2 integer, @3 varbinary(1),

@4 integer, @5 integer, @6 varbinary(1);

-- Hidden Top (1)

DECLARE @T bigint = 1;

SELECT

@1 = T1.keycol,

@2 = T1.val,

@3 = SUBSTRING(T1.othercols, 1, 1),

@4 = A.keycol,

@5 = A.val,

@6 = SUBSTRING(A.othercols, 1, 1)

FROM dbo.T1

OUTER APPLY

(

-- 'Sort' one row

SELECT TOP (1) SQ1.*

FROM

(

-- Highest value below target

-- Hide Top (1) in a variable

SELECT TOP (@T) T2.*

FROM dbo.T2 AS T2

WHERE T2.val < T1.val

ORDER BY T2.val DESC, T2.keycol ASC

) AS SQ1

-- Different sort order

ORDER BY SQ1.val ASC, SQ1.keycol ASC

) AS CA1

CROSS APPLY

(

-- 'Sort' one row

SELECT TOP (1) SQ2.*

FROM

(

-- Lowest value above target

-- Hide Top (1) in a variable

SELECT TOP (@T) T2.*

FROM dbo.T2 AS T2

WHERE T2.val >= T1.val

ORDER BY T2.val ASC, T2.keycol ASC

) AS SQ2

-- Different sort order

ORDER BY SQ2.val ASC, SQ2.keycol DESC

) AS CA2

CROSS APPLY

(

-- In-memory sort

SELECT TOP (1) D.*

FROM

(

-- Constant Scan

VALUES

(CA1.keycol, CA1.val, CA1.othercols),

(CA2.keycol, CA2.val, CA2.othercols)

) AS D

-- Required order, with tie-breaker

ORDER BY

ABS(D.val - T1.val) ASC,

D.val ASC,

D.keycol ASC

) AS A

ORDER BY

T1.val ASC

OPTION

(

NO_PERFORMANCE_SPOOL,

OPTIMIZE FOR (@T UNKNOWN) -- Prevent PEO

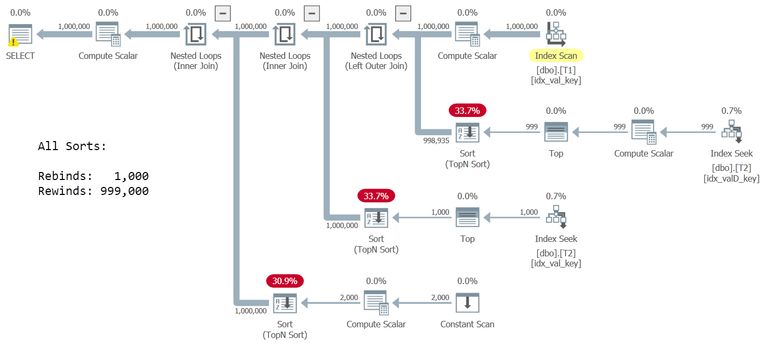

);The execution plan is:

The reported rewinds and rebinds for each of the three Sort operators is now accurate.

Each Sort is able to rewind optimally: The two non-in-memory sorts above the seeks receive a maximum of one row on each rebind. The third Sort receives a maximum of two rows from Constant Scan and Compute Scalar operators, and so is an in-memory sort.

Performance is very good at around 1900ms.

Performance summary

| Option | Time |

|---|---|

| Spool | 850ms |

| Sort Rewinds | 1900ms |

| No spool | 27,000ms |

This is not a bad result at all, considering there are three Sorts versus one Spool and a Sort. A sort is also intrinsically more expensive to create and reset than a spool.

It is still best to use a performance spool where possible, but just occasionally you might need to engineer a plan to cache and replay results in just one critical section.

This can also be done with e.g. a multi-statement function, but it is always nice to have an extra tool available.

Thanks for reading.