Glacially Slow SQL Server Resumable Index Operations

Intoduction

Resumable operations in SQL Server Enterprise Edition have several main advantages:

- The operation can be paused and resumed to fit within a maintenance window.

- Failures like a power outage, failover, or running out of disk space only cause the operation to pause. It can be resumed once the failure has been resolved.

- The transaction log can be backed up or truncated during the resumable operation.

Index rebuilds have been resumable since SQL Server 2017. Resumable index creation was added in 2019. Resumable PRIMARY KEY and UNIQUE CONSTRAINTs were added in 2022.

Online operations are appreciably slower than the offline equivalents. Resumable operations are an extension of online operations, so all resumable operations are also online.

The documentation claims there isn’t much of a performance difference between resumable and online operations:

Generally, there’s no performance difference between resumable and nonresumable online index rebuild. For create resumable index, there’s a constant overhead that causes a small performance difference between resumable and nonresumable index create. This difference is mostly noticeable only for smaller tables.

When you update a resumable index while an index operation is paused:

For read-mostly workloads, the performance effect is insignificant. For update-heavy workloads, you can experience some throughput degradation (our testing shows less than 10% degradation).

At a high level, resumable operations work by building execution plans that do the necessary work incrementally, persisting the partially processed index and recording metadata about progress so far at regular intervals (every 100,000 rows). Each increment is performed within a system transaction. User transactions are not allowed.

This approach allows frequent log truncation and means no more than 100,000 rows worth of work will be need to be redone if a failure occurs.

Scenario

There are many different ways one can employ resumable operations. One example mentioned in the documentation is creating or rebuilding a very large index when available log space is limited.

This takes advantage of points 2 and 3 listed in the introduction:

-

The transaction log can be truncated while the resumable operation is progress (point 3). If log backups can keep up with the rate of log generation caused by the resumable operation and all other concurrent activity, the whole operation can complete without ever pausing.

-

If the database does still run out of log space, the failure will only result in the operation being paused (point 2). It can be (manually) resumed from close to the point of failure once log space becomes available.

The first option (avoiding running out of log) is much to be preferred. The second option ought to work very nearly as well, but as of the time of writing (January 2025) it very much does not, as I will now describe.

Demo

To simulate a large database, I’m going to use the Stack Overflow 2013 sample. It isn’t a very large database, but it will do. I will be using SQL Server 2022 CU16 Developer Edition.

The Votes table contains 52,928,720 rows. It lacks a nonclustered index on the PostId column, so we will create one.

Preparation

Let’s start by allocating 8GB of log, setting the recovery model to FULL and taking a backup.

ALTER DATABASE StackOverflow2013

MODIFY FILE

(

NAME = StackOverflow2013_log,

SIZE = 8GB,

FILEGROWTH = 0KB

);

ALTER DATABASE StackOverflow2013

SET RECOVERY FULL;

BACKUP DATABASE StackOverflow2013 TO DISK = 'NUL';

BACKUP LOG StackOverflow2013 TO DISK = 'NUL';Offline

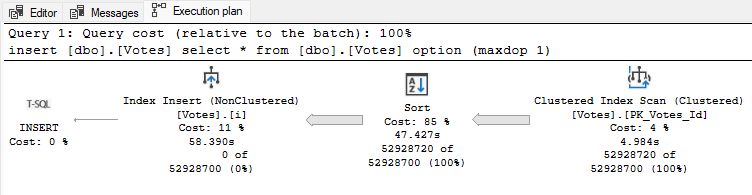

Now create our desired index offline.

CREATE NONCLUSTERED INDEX i

ON dbo.Votes (PostId)

WITH (ONLINE = OFF, MAXDOP = 1);This takes about one minute and uses about 10% of the 8GB log file.

Online

Let’s try that again as an online (but not resumable) operation.

DROP INDEX i ON dbo.Votes;

BACKUP LOG StackOverflow2013 TO DISK = 'NUL';

CREATE NONCLUSTERED INDEX i

ON dbo.Votes (PostId)

WITH (ONLINE = ON, RESUMABLE = OFF, MAXDOP = 1);That takes a bit longer (1m 38s) and uses 70% of the 8GB log file.

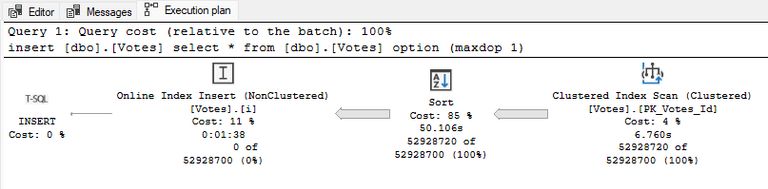

Resumable

Now let’s try the same thing as an online and resumable operation.

DROP INDEX i ON dbo.Votes;

BACKUP LOG StackOverflow2013 TO DISK = 'NUL';

CREATE NONCLUSTERED INDEX i

ON dbo.Votes (PostId)

WITH (ONLINE = ON, RESUMABLE = ON, MAXDOP = 1);Excitingly, this is a bit faster than the online test at 1m 18s, using 80% of the 8GB log.

Aside

The warning on the Sort operator normally indicates a spill, but this wasn’t a normal spill due to lack of memory grant.

The sort in a resumable index plan is a resumable sort, which uses external merge sort (writing sort runs to the user database) as part of its design. Making the operation start the sort again from scratch wouldn’t be very resumable, would it?

Broadly, resumable sort works by ‘spilling’ sort runs to the user database and recording persistent metadata about the operational state. Since the ‘spill’ must survive a restart or failover, tempdb cannot be used, hence SORT_IN_TEMPDB is not available for resumable sorts. The size of sort runs is smaller than the memory grant to reduce the amount of work that must be redone in case of a pause/failure and resume.

Reading unsorted input is also restartable due to the persisted metadata state. Overall, the entire process (not just the sort) is resumable with a minimal loss of work represented by the dynamic chunk size. A system transaction per chunk (metadata and side tables) ensures correctness, while allowing for early log truncation.

In this test, the different sort run sizes and I/O patterns of a resumable sort produce a performance benefit. This is just another example of many smaller sorts being more efficient than one large sort (m log n complexity). With a fast I/O subsystem, the algorithmic advantage can more than make up for the additional I/O cost. My test machine uses reasonably fast (3Gb/s) locally attached NVMe storage.

Resumable, log constrained

As we have seen, performing the build all in one go as an online or resumable operation required most of the 8GB log. Imagine our database was 1,000 times larger and everything scaled linearly. That means we would need most of an 8TB log to build an index on 53 billion rows. Remember, the limit on a single log file is 2TB.

Let’s say our imaginary very large database was limited to 512GB of log. Scaling that down 1,000 times means setting our log to 512MB.

BACKUP LOG StackOverflow2013 TO DISK = 'NUL';

DBCC SHRINKFILE (StackOverflow2013_log, 512);

-- May need a second go

BACKUP LOG StackOverflow2013 TO DISK = 'NUL';

DBCC SHRINKFILE (StackOverflow2013_log, 512);

-- Need to prevent growth from the new smaller size

ALTER DATABASE StackOverflow2013

MODIFY FILE

(

NAME = StackOverflow2013_log,

FILEGROWTH = 0KB

);With sufficient log backups

Running frequent log backups on a second connection, we can run the resumable index build just as we did before. As long as the backups can keep up with the log genration rate, the operation will complete successfully without ever pausing or resuming.

The index was built in the same time as before, but we never exceeded 512MB of used log space. Remember, the previous test required about 80% of an 8GB log file. This is a resumable operation showing its worth. Remember, an online operation does not allow log truncation until it completes.

On demand log backups

It may not be convenient or practical to back up the log often enough to prevent the resumable index operation from running out of space. I had to run a log backup to NUL every two seconds in the test above to achieve that outcome.

An alternative strategy is to run the index operation inside a TRY...CATCH block, performing a log backup whenever an out of log space error is encountered. Most DBAs will have written something like that for different purposes before. The script below implements that with some basic progress reporting added.

SET NOCOUNT, XACT_ABORT ON;

DROP INDEX IF EXISTS i ON dbo.Votes;

DECLARE @StartTime datetime2 = SYSUTCDATETIME();

DECLARE @ResumeTime datetime2 = @StartTime;

DECLARE @Elapsed integer;

DECLARE @TabID integer = OBJECT_ID(N'dbo.Votes', 'U');

DECLARE @SQL nvarchar(max) =

N'

CREATE NONCLUSTERED INDEX i

ON dbo.Votes (PostId)

WITH (ONLINE = ON, RESUMABLE = ON, MAXDOP = 1);

';

TryAgain:

BEGIN TRY

EXECUTE (@SQL);

SET @Elapsed = DATEDIFF(SECOND, @StartTime, SYSUTCDATETIME());

RAISERROR ('Operation completed successfully after %i seconds.', 0, 1, @Elapsed);

END TRY

BEGIN CATCH

-- Out of log space

IF ERROR_NUMBER() = 9002

BEGIN

DECLARE @PC integer =

(

SELECT TOP (1)

CONVERT(integer, FLOOR(R.percent_complete))

FROM sys.index_resumable_operations AS R

WHERE R.[object_id] = @TabID

AND R.[name] = N'i'

);

SET @Elapsed = DATEDIFF(SECOND, @ResumeTime, SYSUTCDATETIME());

RAISERROR

(

'Elapsed %i seconds. Log full at %i%% complete. Will backup log and resume...',

0, 1, @Elapsed, @PC

);

BACKUP LOG StackOverflow2013 TO DISK = 'NUL';

SET @SQL = N'ALTER INDEX i ON dbo.Votes RESUME;';

SET @ResumeTime = SYSUTCDATETIME();

GOTO TryAgain;

END;

;

-- Rethrow any other error. Index operation will be paused.

THROW;

END CATCH;You don’t have to use that script of course. You could just run the command manually, wait for the out of log space message, back up the log, and repeat until the task is done.

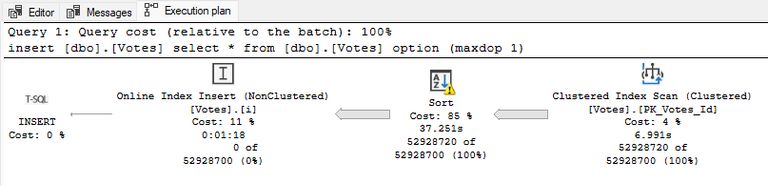

Glacial

You’ll need some patience though.

In total, the index build above ran for 3h 19m 10s.

Remember, the previous resumable tests completed in just over one minute.

Example output from the script above is shown below.

Elapsed 16 seconds. Log full at 28% complete. Will backup log and resume...

Processed 65233 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 65233 pages in 0.167 seconds (3051.693 MB/sec).

Elapsed 14 seconds. Log full at 50% complete. Will backup log and resume...

Processed 57176 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57176 pages in 0.146 seconds (3059.503 MB/sec).

Elapsed 1601 seconds. Log full at 54% complete. Will backup log and resume...

Processed 57387 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57387 pages in 0.146 seconds (3070.767 MB/sec).

Elapsed 644 seconds. Log full at 58% complete. Will backup log and resume...

Processed 57364 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57364 pages in 0.146 seconds (3069.563 MB/sec).

Elapsed 213 seconds. Log full at 62% complete. Will backup log and resume...

Processed 57245 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57245 pages in 0.145 seconds (3084.321 MB/sec).

Elapsed 1585 seconds. Log full at 66% complete. Will backup log and resume...

Processed 57356 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57356 pages in 0.146 seconds (3069.135 MB/sec).

Elapsed 1113 seconds. Log full at 70% complete. Will backup log and resume...

Processed 57319 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57319 pages in 0.146 seconds (3067.155 MB/sec).

Elapsed 1707 seconds. Log full at 74% complete. Will backup log and resume...

Processed 63479 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 63479 pages in 0.161 seconds (3080.308 MB/sec).

Elapsed 488 seconds. Log full at 79% complete. Will backup log and resume...

Processed 63488 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 63488 pages in 0.161 seconds (3080.745 MB/sec).

Elapsed 511 seconds. Log full at 83% complete. Will backup log and resume...

Processed 63429 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 63429 pages in 0.160 seconds (3097.119 MB/sec).

Elapsed 1424 seconds. Log full at 87% complete. Will backup log and resume...

Processed 63551 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 63551 pages in 0.161 seconds (3083.802 MB/sec).

Elapsed 470 seconds. Log full at 91% complete. Will backup log and resume...

Processed 57293 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57293 pages in 0.145 seconds (3086.907 MB/sec).

Elapsed 1039 seconds. Log full at 95% complete. Will backup log and resume...

Processed 57359 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57359 pages in 0.146 seconds (3069.269 MB/sec).

Elapsed 595 seconds. Log full at 99% complete. Will backup log and resume...

Processed 57336 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57336 pages in 0.146 seconds (3068.038 MB/sec).

Operation completed successfully after 11950 seconds.Analysis

This is quite a shocking result.

For clarity, the task appeared busy throughout. It was always RUNNING with no waits to speak of. There was also no unusual latch or spinlock activity.

The process just burned CPU continuously for almost 3 hours 20 minutes.

Looking at the log output, we can see that it made good progress to start with. The first two blocks filled the 512MB log in about 15 seconds. The third block suddenly took 1,601s (almost 27 minutes) to fill the log.

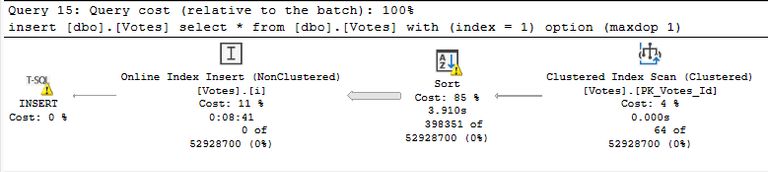

The execution plans are singularly unhelpful. Only the final one is easy to capture, since the preceding blocks of execution all fail with the out of log space error. Neverthless, I can tell you that looking at them live (using sys.dm_), they were mostly similar to the final one:

The plan shows 64 rows from the Clustered Index Scan and 398,351 rows from the Sort. It’s not unusual for special internal plans to produce unhelpful results like this, sadly.

The difference in performance between the first two intervals and the third is that the plan was initially reading from the Clustered Index Scan, persisting unsorted chunks, performing chunk-sized in-memory sorts, and writing sorted chunk runs to the user database. With fast I/O, this didn’t take all that long, but did generate significant log.

The third interval was the first one where the sorting operation was essentially complete, in that all sorted data was safely on disk in external runs ready to be merged into the final single sorted output stream.

Once fully sorted rows start arriving at the Online Index Insert operator, progress slows to an absolute crawl despite being constantly busy on the CPU.

Details

Analysis using the Windows Performance Recorder and Analyzer reveals that SQL Server spends almost all of its time within the Online Index Insert operator on calls to sqlmin! and sqlmin!.

Both these calls spend a great deal of time on a linear search of a large in-memory pointer structure. This happens for every row the Online Index Insert operator tries to insert.

There are no yield points in this code and no latches or spinlocks are taken. SQL Server is simply busy doing something wildly inefficient. None of this takes anywhere near long enough to worry the Resource Monitor, so nothing is recorded in the error log (non-yielding task, for example).

Nonetheless, the search does take long enough to slow each row insert to a crawl. Inserting 52,928,720 rows took 3h 19m 10s—less than 4,900 rows per second.

Fix

It turns out the problem only occurs if FastLoadContext is enabled. This facility enables minimally-logged inserts into non-empty b-tree indexes and has been on by default since SQL Server 2016. Before that, documented trace flag 610 was required. Remember that unlike an offline or online operation, a resumable index operation will end up inserting rows into a non-empty b-tree if it needs to resume after a pause or failure.

Now, this ought to work just fine as normal FastLoadContext inserts to non-empty b-trees do. The fact that it doesn’t is almost certainly a detailed implementation issue that only Microsoft can fix.

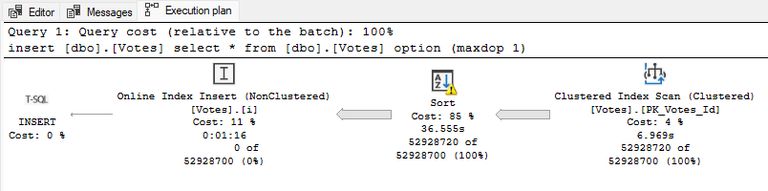

Meanwhile, we can use documented trace flag 692 to disable FastLoadContext during the resumable index operation. It works just fine for this purpose at session level using DBCC TRACEON (692).

Performance improvement

Running the previous test that ran for 3h 19m 10s with TF692 enabled produces the following log output:

DBCC execution completed. If DBCC printed error messages, contact your system administrator.

Elapsed 13 seconds. Log full at 23% complete. Will backup log and resume...

Processed 57417 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57417 pages in 0.147 seconds (3051.472 MB/sec).

Elapsed 15 seconds. Log full at 48% complete. Will backup log and resume...

Processed 57329 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57329 pages in 0.147 seconds (3046.821 MB/sec).

Elapsed 9 seconds. Log full at 53% complete. Will backup log and resume...

Processed 57336 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57336 pages in 0.146 seconds (3068.038 MB/sec).

Elapsed 9 seconds. Log full at 56% complete. Will backup log and resume...

Processed 57357 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57357 pages in 0.146 seconds (3069.162 MB/sec).

Elapsed 10 seconds. Log full at 60% complete. Will backup log and resume...

Processed 65457 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 65457 pages in 0.167 seconds (3062.172 MB/sec).

Elapsed 9 seconds. Log full at 64% complete. Will backup log and resume...

Processed 63464 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 63464 pages in 0.161 seconds (3079.556 MB/sec).

Elapsed 9 seconds. Log full at 68% complete. Will backup log and resume...

Processed 63493 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 63493 pages in 0.161 seconds (3080.963 MB/sec).

Elapsed 10 seconds. Log full at 72% complete. Will backup log and resume...

Processed 63491 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 63491 pages in 0.161 seconds (3080.866 MB/sec).

Elapsed 9 seconds. Log full at 76% complete. Will backup log and resume...

Processed 63489 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 63489 pages in 0.161 seconds (3080.769 MB/sec).

Elapsed 9 seconds. Log full at 80% complete. Will backup log and resume...

Processed 57330 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57330 pages in 0.146 seconds (3067.717 MB/sec).

Elapsed 8 seconds. Log full at 83% complete. Will backup log and resume...

Processed 57333 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57333 pages in 0.145 seconds (3089.062 MB/sec).

Elapsed 8 seconds. Log full at 87% complete. Will backup log and resume...

Processed 57330 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57330 pages in 0.146 seconds (3067.717 MB/sec).

Elapsed 8 seconds. Log full at 90% complete. Will backup log and resume...

Processed 57329 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57329 pages in 0.145 seconds (3088.846 MB/sec).

Elapsed 8 seconds. Log full at 94% complete. Will backup log and resume...

Processed 57330 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57330 pages in 0.146 seconds (3067.744 MB/sec).

Elapsed 8 seconds. Log full at 97% complete. Will backup log and resume...

Processed 57326 pages for database 'StackOverflow2013', file 'StackOverflow2013_log' on file 1.

BACKUP LOG successfully processed 57326 pages in 0.145 seconds (3088.685 MB/sec).

Operation completed successfully after 150 seconds.

DBCC execution completed. If DBCC printed error messages, contact your system administrator.The index was fully built in only 2m 30s, despite the numerous log backups and pause/resume cycles.

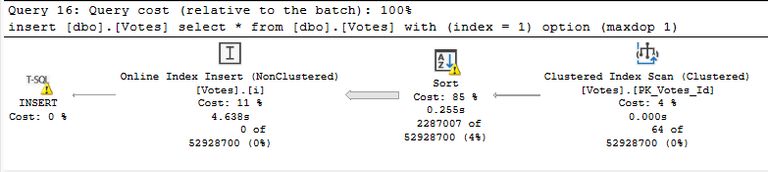

The execution plan for the final resume is still unhelpful, but you can at least see the much improved execution time:

Losing FastLoadContext is not so much of an issue since we are running under the FULL recovery model. One might argue Microsoft should simply disallow this optimization under full recovery for resumable operations.

Note: Index builds and rebuilds are fully logged under the full recovery model, but this isn’t as much of a problem as you might imagine.

Like SELECT INTO, these operations may not be minimally logged (only allocations logged, page images logged at commit time) but they are efficiently logged. There isn’t a separate log record for each row inserted to new pages; extents are bulk allocated and complete pages are logged in a single log record.

Final Thoughts

It wasn’t at all obvious to locate the underlying cause, since the in-memory structure subject to repeated linear search didn’t have a clear parent.

The problem doesn’t appear to manifest using normal PAUSE and RESUME operations. It might be that there is something special about running out of log space. Or, it might be that any unexpected error is enough. More testing is needed.

The same problem occurs with a resumable index rebuild, which does not feature a resumable sort.

The tests in this article all used serial execution for simplicity. The same issues occur with parallel resumable operations.

Until Microsoft fixes the underlying cause, consider enabling trace flag 692 for resumable index operations under the FULL recovery model. There’s no workaround for those cloud platforms where users cannot set trace flags.

Azure Feedback item to vote on: Disable FastLoadContext for Resumeable Operations under the FULL recovery model.

Thanks for reading.