Reducing NESTING TRANSACTION FULL Latch Contention

Each additional worker thread in a parallel execution plan executes inside a nested transaction associated with the single parent transaction.

Parallel worker access to shared parent transaction structures is protected by a latch. A NESTING_TRANSACTION_READONLY latch is used for a read-only transaction. A NESTING_TRANSACTION_FULL latch is used if the transaction has modified the database.

This design has its roots in SQL Server 7, where read-only query parallelism was introduced. SQL Server 2000 built on this with parallel index builds, which for the first time allowed multiple threads to cooperate to change a persistent database structure. Many improvements have followed since then, but the fundamental parent-child transaction design remains today.

Though lightweight, a latch can become a point of contention when requested sufficiently frequently in incompatible modes by many different threads. Some contention on shared resources is to be expected; it becomes a problem when latch waits start to affect CPU utilisation and throughput.

Latch convoys

One well-known problem in multithreaded code is the convoy.

Say we have a shared resource protected by a latch where each operation on the resource needs 2ms processing time while holding the latch in exclusive mode. This arrangement could handle a maximum of 1000/2 = 500 evenly-spaced requests per second before a queue starts to build up.

Once a queue (or ‘convoy’) has formed, each new request will have to wait 2ms for each request ahead of it in the queue before having its turn. The size of the queue will only start to decrease if the number of new requests per second falls below the maximum of 500.

In reality, the situation is worse than described because waiting for the latch is not free. Any thread requesting the latch but finding it in use will need to wait. In SQL Server, this means yielding the scheduler to another thread and sleeping until notified the latch is available. Once notified, the thread becomes runnable and, after a context switch, will get time on the scheduler to do its work.

The extra work involved in sleeping, being notified, waking up, and taking a context switch reduces the CPU time available for useful work while holding the latch. Throughput falls below the theoretical maximum of 500 due to these overheads, and the system can get into a state where more time is spent on administration than useful work.

Fairness is not always a virtue

A big contributor to the convoy problem is the concept of fairness. In this context, fairness means a thread passing the latch on to the longest waiting thread in the queue. In SQL Server, this means the thread releasing the latch checks the queue of waiters, passes latch ownership to the thread at the head of the queue, then signals it to resume from its wait.

This is an obviously equitable scheme, but it’s not ideal for overall system throughput. The signalled thread will need to wake up and context-switch onto the scheduler before doing useful work. This guarantees the system incurs overhead each time the latch is acquired when a queue of waiters exists.

It can be worse again if another thread is scheduled ahead of the signalled thread, but SQLOS takes steps to avoid this: A signalled thread is boosted to the head of the scheduler’s runnable queue up to 1000 times in a row, after which it goes to the end of the list.

Unfair latches

An alternative ‘unfair’ scheme doesn’t pass the latch on to the waiter at the head of the queue and signal it. Instead, the latch is simply released, and SQL Server uses an operating system call to signal one of the waiters. This will usually be the waiter at the head of the waitlist, but it’s explicitly not guaranteed:

If more than one thread is waiting on a mutex, a waiting thread is selected. Do not assume a first-in, first-out (FIFO) order. External events such as kernel-mode APCs can change the wait order.

You may be wondering what the advantage of this is. Remember, the latch is highly contended. This means requests are coming in all the time— it’s not limited to threads currently on the waiting list.

This is where the unfairness comes in: the signalled waiter may be usurped by a non-waiting thread which just happens to request the latch at an opportune moment. This is good news for throughput because it maximises the amount of useful work done under the latch. The alternative is for nothing useful to happen until the signalled thread completes its wake-up and context switch.

It’s unfair to the waiter, but the chances are good it’ll get its chance eventually. Starvation is a theoretical possibility, but in practice the quasi-random nature of events means the situation is unlikely to persist for long. Threads suffering from the unfairness may experience increased latency, but overall throughput is increased.

Nesting transaction latches

By default, the nesting transaction latches are fair. In modern builds of SQL Server, this doesn’t often present as a problem with the read-only NESTING_TRANSACTION_READONLY latch.

The NESTING_TRANSACTION_FULL latch can be a different story. This latch can be acquired and released extremely rapidly in data-changing execution plans. The latch is required when a parallel worker wants to add a log record to the current in-memory log block.

Parallel data changes

A NESTING_TRANSACTION_FULL latch is only required when a data-changing execution plan operator runs with parallelism. A serial data-changing (insert/update/delete/merge) operator never requires this latch because it operates directly on the parent transaction. Other operators in the plan might use parallelism, but they don’t need the latch because they don’t change data.

Before SQL Server 2014, the main point of contention for NESTING_TRANSACTION_FULL latches was in parallel index-building plans (added in SQL Server 2008). Each worker running the parallel index insert operator needs to acquire this latch before adding a log record.

SQL Server 2014 expanded the field by allowing parallel SELECT INTO. SQL Server 2016 extended this to parallel INSERT...SELECT for heaps (with some documented restrictions and others too).

In all of the above cases, latch contention can be significantly reduced (but not eliminated) by ensuring the data change is minimally-logged. This reduces the both the amount and frequency of logging, relieving pressure on the latch.

Unfair nesting latches

Many hot latches in SQL Server are already unfair, but the nesting transaction latches are not.

This changed in SQL Server 2019 CTP 2.5 when global trace flag 3933 was added to make these latches unfair. This trace flag is also effective in SQL Server 2022 CTP 2.1 where there are also changes to the related feature flag.

The trace flag is undocumented, so it seems likely the flag is only for use when recommended by Microsoft support staff.

Demo

To test the impact of unfair latches on a high-concurrency insert, create a database with at least 2GB of pre-allocated space evenly distributed across one file per CPU, and 2.5GB of pre-allocated transaction log. This will avoid file growths during the test.

I’m using SQL Server 2019 CU17 for the results I’ll show here.

The idea of this test is to rapidly generate log records using a parallel INSERT...SELECT statement. To make the log easy to clear, I’ll set the recovery model to SIMPLE and then FULL without backing up the database. This will leave the database in a state where full logging occurs but a checkpoint can still truncate the log.

The data load will still be a bulk load, it just won’t be minimally-logged. If you look at locks while the load is running, you’ll see BU bulk-update locks and various bulk operation locks on the HoBt and allocation unit.

The same contention issues I’ll describe here can show up with minimal logging enabled, but a greater volume of inserted data would be needed. I’m using full logging for these tests primarily as a convenience. Also, many real-world systems are unable to use a recovery model necessary for minimal logging.

Setup

A script to set up the environment and test tables follows:

ALTER DATABASE CURRENT SET RECOVERY SIMPLE;

ALTER DATABASE CURRENT SET RECOVERY FULL;

DROP TABLE IF EXISTS

dbo.TableName,

#BatchMe;

-- Test target

CREATE TABLE dbo.TableName

(

ID bigint NOT NULL,

TypeID integer NOT NULL,

DateTimeUTC datetime NOT NULL

);

-- Batch mode helper

CREATE TABLE #BatchMe

(

i integer NOT NULL,

INDEX CCS_BM CLUSTERED COLUMNSTORE

);Test reset

To reset everything before each test, run:

TRUNCATE TABLE dbo.TableName;

CHECKPOINT;

DBCC SQLPERF (LOGSPACE);Check the available log space before proceeding. You may need to issue the checkpoint more than once to maximize the reusable log space.

Test run

The test parallel inserts 64 * 1024 * 1024 rows into the target heap table using an efficient source of rows.

The latch, spinlock, and wait counter DMVs are reset so I can easily see how many of each type occurred during the test. I’m not interested in latches that could be acquired without waiting here.

DBCC SQLPERF ('sys.dm_os_latch_stats', CLEAR);

DBCC SQLPERF ('sys.dm_os_wait_stats', CLEAR);

DBCC SQLPERF ('sys.dm_os_spinlock_stats', CLEAR);

-- Record start time

DECLARE @Start datetime2(3) = SYSUTCDATETIME();

-- For maximum performance, run with actual

-- execution plan turned OFF

WITH

V32 (v) AS

(

SELECT 1 FROM (

VALUES

(1),(1),(1),(1),(1),(1),(1),(1),

(1),(1),(1),(1),(1),(1),(1),(1),

(1),(1),(1),(1),(1),(1),(1),(1),

(1),(1),(1),(1),(1),(1),(1),(1)

) AS V32 (v)

),

V1K (v) AS (SELECT 1 FROM V32, V32 AS B),

V1M (v) AS (SELECT 1 FROM V1K, V1K AS B),

V32M (v) AS (SELECT 1 FROM V32, V1M),

V64M (v) AS

(

SELECT 1 FROM V32M

UNION ALL

SELECT 1 FROM V32M

),

N (n) AS

(

SELECT ROW_NUMBER() OVER (ORDER BY @@SPID)

FROM V64M

LEFT JOIN #BatchMe ON 0 = 1

)

INSERT dbo.TableName

WITH (TABLOCK)

(ID, TypeID, DateTimeUTC)

SELECT

N.n,

TypeID = 2,

DateTimeUTC = DATEADD(

SECOND,

N.n,

CONVERT(datetime, '2020-01-01', 120))

FROM N

OPTION (MAXDOP 11);

-- Display duration

SELECT Elapsed =

DATEDIFF(MILLISECOND, @Start, SYSUTCDATETIME());

-- Latches

SELECT

LS.latch_class,

waits = LS.waiting_requests_count,

wait_time = LS.wait_time_ms,

max_time = LS.max_wait_time_ms

FROM sys.dm_os_latch_stats AS LS

WHERE

LS.waiting_requests_count > 0

ORDER BY

LS.wait_time_ms DESC;

-- Spinlocks

SELECT

SP.[name],

SP.collisions,

SP.spins,

SP.sleep_time,

SP.backoffs

FROM sys.dm_os_spinlock_stats AS SP;

-- Waits

SELECT

WS.wait_type,

waits = WS.waiting_tasks_count,

wait_time = WS.wait_time_ms,

max_time = WS.max_wait_time_ms,

signal_time = WS.signal_wait_time_ms

FROM sys.dm_os_wait_stats AS WS

ORDER BY

WS.waiting_tasks_count DESC;Parallelism and execution plan

My laptop has 12 hyperthreaded (six physical) cores.

I’m running the test at MAXDOP 11 so the additional parallel workers are assigned to 11 schedulers, leaving one free for the parent task. The parent task has nothing to do in this plan except retrieve packets from the final exchange operator (Gather Streams).

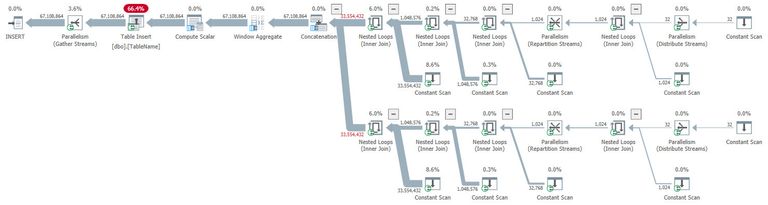

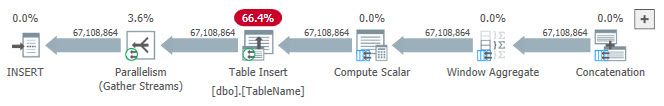

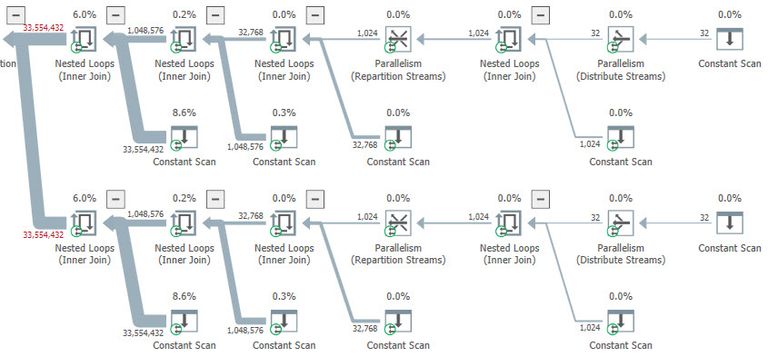

An example execution plan for the parallel insert:

Plan split into two parts for easier viewing:

The final Compute Scalar, Window Aggregate, and Concatenation operators run in batch mode (shown in Plan Explorer with blue highlights).

The row-production part of the plan generates 64 * 1024 * 1024 rows in less than a second when run separately. This will serve to drive the parallel insert to its limits.

Result with a fair latch

The data load completes in around 5.5 seconds with the following representative sample output (important elements only shown):

Latches

| latch_class | waits | wait_time | max_time |

|---|---|---|---|

NESTING_TRANSACTION_FULL |

271,609 | 22,642 | 22 |

Remember, this DMV shows only the times a thread had to wait because the latch was not available. The thread adds itself to the latch’s wait queue, then goes to sleep, waiting to be signalled.

The cumulative wait time of 22.6 seconds across the 11 parallel workers is pretty high, as is the sheer number of individual waits at over 270 thousand. This latch needs to be acquired and released every time a worker needs to add a log record to the log cache.

Spinlocks

| name | collisions | spins | sleep_time | backoffs |

|---|---|---|---|---|

X_PACKET_LIST |

3,349 | 56,635 | 0 | 8 |

ALLOC_CACHES_HASH |

173 | 2,148 | 0 | 3 |

LATCH_SPIN_STATS |

0 | 0 | 0 | 0 |

This output shows some minor contention on the allocation cache and a little more on the packet list inside exchanges (Parallelism operators).

Waits

| wait_type | waits | wait_time | max_time | signal_time |

|---|---|---|---|---|

LATCH_EX |

271,458 | 21,949 | 21 | 2,644 |

CXPACKET |

69,597 | 4,926 | 96 | 579 |

CXCONSUMER |

6,784 | 1,330 | 15 | 246 |

The LATCH_EX wait associated with the NESTING_TRANSACTION_FULL latch is dominant here, as expected.

There are also some waits on CXPACKET, accumulated when a worker thread finds no free exchange packet at the final exchange. The thread then has to wait for the parent task (running in execution context zero) to read some from the consumer side of the exchange and recycle the buffers.

The smaller number of CXCONSUMER waits occur when the parent task happens to find no packets ready to read. The single parent task can read packets from the exchange very quickly when it is running.

Timing and scheduling differences mean CXPACKET and CXCONSUMER waits can happen at different points during plan execution.

Result with an unfair latch

After enabling global trace flag 3933, the test completes in around 4.5 seconds (down from 5.5). A representative sample output and analysis follows:

Latches

| latch_class | waits | wait_time | max_time |

|---|---|---|---|

NESTING_TRANSACTION_FULL |

57 | 1 | 1 |

Latch waits have dropped to almost nothing from 271,609 with the fair latch. There’s no evidence of increased latency or latch starvation due to unfairness in this particular test. In fact, maximum latency has dropped from 22ms to just 1ms.

Spinlocks

| name | collisions | spins | sleep_time | backoffs |

|---|---|---|---|---|

LATCH_SPIN_STATS |

337,110 | 221,891,800 | 0 | 41 |

ALLOC_CACHES_HASH |

438 | 5,736 | 0 | 17 |

X_PACKET_LIST |

15,460 | 82,308 | 0 | 6 |

The LATCH_SPIN_STATS is an unusual DMV entry in a couple of ways. It’s not a spinlock per se—it accounts for spinning on latches in general.

Unlike all other wait, spinlock, and latch counters, this entry is maintained even if SQL Server is started with the -x option. This counter was all zeroes in the fair latch test, implying fair latches don’t spin to acquire the latch, they just wait.

Spins and back-offs

When a thread finds an unfair latch already held, it spins on the CPU briefly in case the latch becomes available very soon (as is generally expected). The lack of latch waits from this test is testimony to this being an effective strategy in this instance. The downside on this occasion is a large number of spins and a small number of back-offs.

Spinning involves running a progressively larger loop of CPU pause instructions, each of which introduces a tiny delay (tens of cycles), while reducing CPU power consumption or allowing any unblocked sibling hyperthread to make good use of shared core resources.

If a short cycle of spinning doesn’t acquire the latch, a back-off occurs, where the thread calls the Windows function Sleep or SwitchToThread.

In either case, the effect is to yield the remaining operating system time-slice to a waiting Windows thread, if any. Importantly, this call doesn’t release the SQLOS scheduler, so no other thread on the same scheduler will be run, and no SQLOS context-switch occurs. When the Windows call returns, the same SQL Server thread will continue execution on the current scheduler and try to acquire the latch again. Enough unsuccessful back-offs and the thread will wait.

The high number of spins shown here means this is a pretty hot latch, but threads were almost always able to acquire it after spinning, before a back-off or wait became necessary. Quite a lot of CPU time was ‘wasted’ spinning but it seems the alternative (waiting) would be worse, if the fair latch test is a guide.

Waits

| wait_type | waits | wait_time | max_time | signal_time |

|---|---|---|---|---|

CXPACKET |

280,905 | 19,310 | 155 | 2923 |

CXCONSUMER |

1,043 | 102 | 3 | 10 |

LATCH_EX |

56 | 3 | 0 | 1 |

TheLATCH_EX waits have dropped dramatically from 271,458 as expected.

CXPACKET has increased from 69,597 to 280,905. Increasing throughput at the parallel insert results in a higher fill-rate for packets on the producer side of the final exchange.

CXCONSUMER waits have dropped from 6,784 to 1,043. The parent task on the consumer side of the final exchange found no packets ready to process less often.

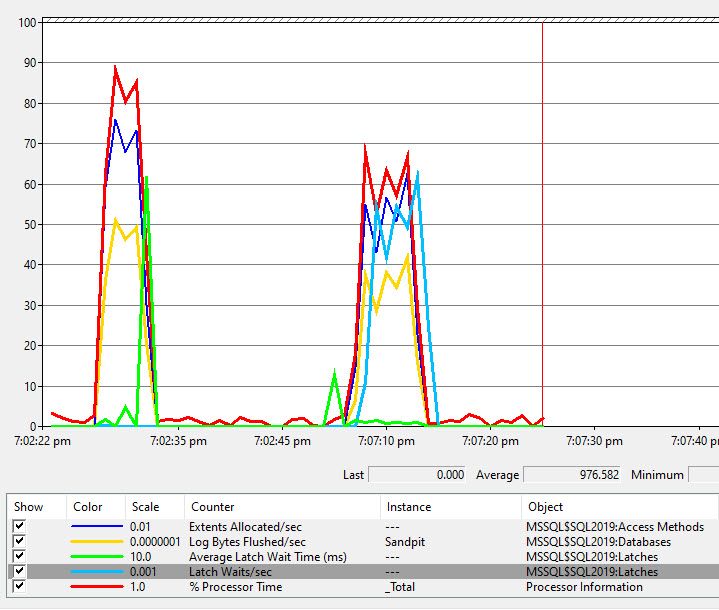

Performance monitor

The following shows a run for the unfair latch on the left and for the fair latch on the right:

The unfair latch shows better CPU utilization, more extents allocated per second, more log bytes flushed per second, and very few latch waits. There’s a brief spike in average latch wait time (green) at the end.

The fair latch shows a less efficient pattern in general, with very many short duration waits. Throughput and resource utilization are lower in this test when the fair latch is used.

Conclusion

The overall speedup in this test with the unfair latch, from 5.5 seconds elapsed to 4.5 seconds is worthwhile, but perhaps less dramatic than expected, given the large reduction in latch waits.

As so often, eliminating one bottleneck exposes another. The unfair latch is pretty hot in these tests. The final exchange also has limits due to its architecture and limited buffer size. Exchange limitations are increasingly evident at higher DOP (> 16 is really pushing it).

It’s a shame the exchange is necessary to start parallelism in this plan. Moving packets across the exchange serves no useful purpose—it’s necessary only to reuse the existing row-mode parallel architecture for parallel insert.

High-performance tests are very subject to precise local conditions and undocumented internal details. This test happened to show better throughput and resource usage for parallel insert when an unfair latch is used to protect the shared transaction descriptor.

Other tests with a different plan shape, operators, or data size and distribution might produce the reverse result. Microsoft have chosen not to make the parallel nested log latch unfair by default. This suggests large performance increases may be difficult to produce in practice, or that serious regressions are possible.

If you’re experiencing high contention on NESTING_TRANSACTION_FULL latches with SQL Server 2019 or later, you might want to contact Microsoft Support and ask if trace flag 3933 is right for you.