Sorting, Row Goals, and the TOP (100) Problem

Introduction

When you write a query to return the first few rows from a potential result set, you’ll often use the TOP clause.

To give a precise meaning to the TOP operation, it will normally be accompanied by an ORDER BY clause. Together, the TOP…ORDER BY construction can be used to precisely identify which top ‘n’ rows should be returned.

The ‘Top N’ Sort

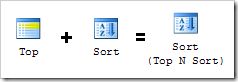

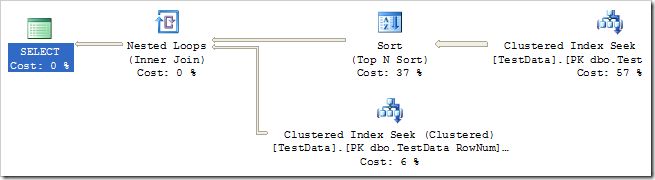

Thinking about how this requirement might be implemented in an executable query plan, we might expect to see a Sort iterator followed by a Top. In reality, the query optimizer can often collapse these two related operations into a single iterator, a Sort running in Top N Sort mode:

That is an idea you might find familiar if you read my previous post Row Goals and Grouping where we saw how a Sort followed by a Stream Aggregate can sometimes be collapsed into a Sort iterator running in Sort Distinct mode.

The General Sorting Algorithm

SQL Server’s normal sorting algorithms are suited to a wide range of ordering requirements. They work extremely well regardless of the data types involved, the size of data to be sorted, or the number of sort keys specified. They also make good use of available memory resources, and can spill to tempdb if required.

It is a common misconception that SQL Server will try to perform a sort entirely in memory if it can. In fact the algorithms used are more complex; they aim to achieve a balance between memory usage and response time, while maintaining high levels of resource concurrency. Memory is a precious resource in the server, so SQL Server may spill a sort to tempdb, even if sufficient main memory is available.

Sorting with a Row Goal

As sophisticated and highly-tuned as the main sorting algorithms are, they do assume that the full set of sorted rows is always required. When we use a TOP expression, the FAST n query hint, or even an EXISTS clause, we indicate that we would prefer a plan (or part of a plan) optimized to produce the first ‘n’ rows quickly.

Say we have a million-row table, and we just want the first ten rows (in some particular order). It seems wasteful to fully sort all one million rows when we know that a maximum of ten rows will ultimately be needed. SQL Server addresses this problem by providing a second algorithm, optimized to return a small number of rows quickly. This second method must still examine all candidate rows of course, but it knows only to keep the ‘n’ highest-ranked rows as it goes.

It is important to realize that the two sorting approaches are complementary. The second method only works well in a fairly narrow set of circumstances and is particularly unsuitable for large sorts since it cannot spill to tempdb. This alternative algorithm can only be used by a Sort iterator running in Top N Sort mode. Normal Sort iterators always use the default sorting method. To be clear, the Top N Sort iterator may use either algorithm.

Sadly, the query plan does not currently expose any information to identify which algorithm was used in a given query execution.

Achieving the Right Balance

As you might imagine, determining the optimal algorithm to use in a particular circumstance depends on some complex considerations. You might further imagine that SQL Server performs a number of fairly hairy calculations to determine the optimal choice, and those calculations might depend on heuristics as well as statistical information. No doubt, you may reason, many extremely clever people have given the best years of their careers to find a great way to make this important choice.

In fact, you would be quite wrong about that. SQL Server always uses the alternative algorithm where the top 100 (or fewer) rows are specified. In all other cases, the normal sorting routines are used. It really is as simple as that.

This heuristic often works well enough, but it is not at all difficult to engineer situations where it comes unstuck.

Coming Unstuck - 1

This first example is based on a question originally asked on the MSDN forums in December 2009.

At the end of this post, you will find links to the original forum thread (with important contributions from Brad Schulz among others) and subsequent blogs on the subject by Adam Haines and Fabiano Amorim.

As usual, we will need a test table and some sample data:

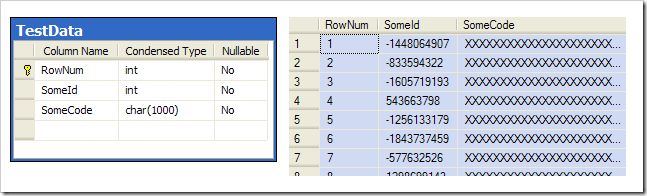

The table has a clustered primary key on the identity column RowNum, an integer SomeId column containing random numbers, and a char(1000) padding column named SomeCode.

Example code

Here is the code necessary to create the above table and populate it with 50,000 rows:

IF OBJECT_ID(N'dbo.TestData', N'U') IS NOT NULL

DROP TABLE dbo.TestData;

GO

CREATE TABLE dbo.TestData

(

RowNum integer IDENTITY NOT NULL,

SomeId integer NOT NULL,

SomeCode char(1000)

COLLATE LATIN1_GENERAL_CI_AS

NOT NULL

DEFAULT (REPLICATE('X', 1000)),

CONSTRAINT [PK dbo.TestData RowNum]

PRIMARY KEY CLUSTERED (RowNum)

);

GO

INSERT TOP (50000)

dbo.TestData

WITH (TABLOCKX)

(SomeId)

SELECT

SomeId = CHECKSUM(NEWID())

FROM master.sys.columns AS C1

CROSS JOIN master.sys.columns AS C2

CROSS JOIN master.sys.columns AS C3;

GO

UPDATE STATISTICS dbo.TestData

([PK dbo.TestData RowNum])

WITH FULLSCAN;

CREATE STATISTICS [stats dbo.TestData SomeId]

ON dbo.TestData (SomeId)

WITH FULLSCAN;

CREATE STATISTICS [stats dbo.TestData SomeCode]

ON dbo.TestData (SomeCode)

WITH FULLSCAN;Test query

We are asked to take a range of rows based on RowNum and return the first ‘n’ rows in SomeId sorted order.

We will run two tests, which are identical except one specifies TOP 100 and other TOP 101:

CHECKPOINT;

DBCC DROPCLEANBUFFERS;

DBCC FREEPROCCACHE;

GO

-- Test 1

DECLARE

@RowNum integer,

@SomeId integer,

@SomeCode char(1000);

SELECT TOP (100)

@RowNum = TD.RowNum,

@SomeId = TD.SomeId,

@SomeCode = TD.SomeCode

FROM dbo.TestData AS TD

WHERE

TD.RowNum < 30000

ORDER BY

TD.SomeId ASC

OPTION

(MAXDOP 1);CHECKPOINT;

DBCC DROPCLEANBUFFERS;

DBCC FREEPROCCACHE;

GO

-- Test 2

DECLARE

@RowNum integer,

@SomeId integer,

@SomeCode char(1000);

SELECT TOP (101)

@RowNum = TD.RowNum,

@SomeId = TD.SomeId,

@SomeCode = TD.SomeCode

FROM dbo.TestData TD

WHERE

TD.RowNum < 30000

ORDER BY

TD.SomeId ASC

OPTION

(MAXDOP 1);Execution plan and performance

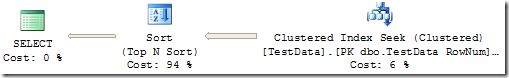

Both produce identical query plans, using a range seek to find the rows, and a Top N Sort to perform the sort:

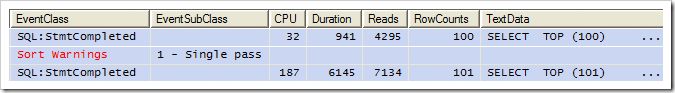

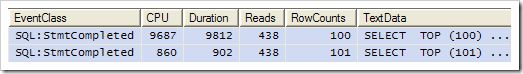

Despite this, we find that the two methods have very different performance:

In this particular test, the TOP (101) query is over six times slower than the TOP (100) version.

Analysis

One clear difference between the two runs is that the 101-row example spilled to tempdb (as indicated by the sort warning), whereas the TOP (100) version (using the alternative algorithm) did not. In fact, the TOP (101) example will always spill to tempdb with the specific sample data set, regardless of the amount of memory available to SQL Server.

This behaviour is by design. As I mentioned earlier, SQL Server does not always attempt to grant enough memory to a sort to ensure it occurs entirely in memory. The result is a compromise between the performance of one individual query, and the needs of the SQL Server instance as a whole.

The exact formula used to compute the memory grant for a sort operation is undocumented so the details may change at any time.

It is interesting that if we change the test to use a char(100) padding column instead of char(1000), the spill to tempdb does not occur, and the performance difference between TOP (100) and TOP (101) disappears.

Note for the demo under compatibility level 150

The sort in the demo no longer spills in SQL Server 2019 when compatibility level 150 is used. To see the spill use either of these hints:

USE HINT ('QUERY_OPTIMIZER_COMPATIBILITY_LEVEL_140')MAX_GRANT_PERCENT = 0

The general behaviour remains the same. TOP (100) never spills, but TOP (101) can. The original demo written in 2010 simply doesn’t reproduce that under compatibility level 150.

Compatibility level 150 makes a fix for the sort memory grant (originally released under trace flag 7470) the default behaviour.

Workarounds

Since it seems that spilling to tempdb is the problem, we might think of artificially modifying the query to fool the server into giving it a larger memory grant. This is usually a misguided approach in my opinion.

From the actual execution plan, we can see that the intermediate result set flowing into the Sort iterator is 29MB (30,000 rows of 1015 bytes each). Just twenty concurrent executions of that query would require 580MB of memory. If you enjoy debugging RESOURCE_SEMAPHORE waits and unpredictable query performance, larger memory grants might be the option for you 🙂

That aside, it turns out that SQL Server gives even the standard-algorithm query a memory grant of almost 11MB. This seems excessive considering the task at hand. We are only sorting on 30,000 integer SomeId column values, which ought to fit within 120KB or so. This implies that the normal sort algorithms require a memory grant at least as big as the entire data set (not just the sort keys) to avoid spilling to disk.

Adding Lookups

By excluding the large padding column from the sort, we can reduce the memory grant to just 2.75MB. We can modify our query to execute in two stages:

- Find the top ‘n’ rows sorted by

SomeId - Join back to the table to fetch the padding column values for just those rows using the primary key values from step 1

In the implementation below, I have used a common-table expression to find the 101 rows:

WITH

Top101 AS

(

-- Top 101 rows, excluding the padding

SELECT TOP (101)

TD.RowNum,

TD.SomeId

FROM dbo.TestData AS TD

WHERE

TD.RowNum < 30000

ORDER BY

TD.SomeId ASC

)

SELECT

Top101.RowNum,

Top101.SomeId,

TD2.SomeCode

FROM Top101

JOIN -- Fetch the padding column for just 101 rows

dbo.TestData AS TD2

ON TD2.RowNum = Top101.RowNum

ORDER BY

Top101.SomeId ASC

OPTION

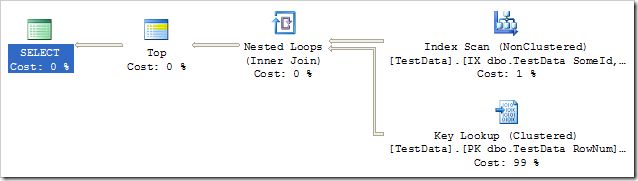

(MAXDOP 1);The query plan produced shows a Top N Sort of the key values, followed by a Nested Loops lookup to fetch the SomeCode column values:

There are many ways to rewrite the query following the same general idea e.g. using APPLY, a subquery, or a ranking function like ROW_NUMBER). Brad Schultz suggested one particularly compact approach (though with slightly different semantics that are not important in this case):

SELECT TOP (101)

TD.RowNum,

TD.SomeId,

(

SELECT

TD2.SomeCode

FROM dbo.TestData AS TD2

WHERE

TD2.RowNum = TD.RowNum

)

FROM dbo.TestData AS TD

WHERE

TD.RowNum < 30000

ORDER BY

TD.SomeId ASC

OPTION

(MAXDOP 1);Avoiding the Sort

A further solution is to create an index to avoid the sort altogether:

CREATE UNIQUE INDEX [IX dbo.TestData SomeId, RowNum]

ON dbo.TestData (SomeId, RowNum);This is a very compact index—just 4 bytes (plus overhead) to store each SomeId value. The original TOP (101) query now produces this sort-free plan:

Rows are acquired from the index in SomeId order, and the RowNum predicate is applied as a residual. The first 101 rows that match result in a Key Lookup to fetch the padding column, and we’re done.

Each of these solutions has its advantages and disadvantages, so each might be considered ‘best’ depending on the wider circumstances. For example, the index-based query is the only version which does not require a memory grant. If the design goal is predictable (rather than absolute best) performance, only the last version can guarantee that it will not wait on a memory grant.

Coming Unstuck – 2

It seems only fair to present a case where TOP (100) performs significantly worse than TOP (101). It turns out that the alternative sort algorithm’s worst case performance occurs with long similar sort keys presented in exactly reversed order. To demonstrate this, we’ll use the char(100) version of the test rig:

CREATE TABLE dbo.TestData

(

RowNum integer IDENTITY NOT NULL,

SomeId integer NOT NULL,

SomeCode char(100)

COLLATE LATIN1_GENERAL_CI_AS

NOT NULL

DEFAULT (REPLICATE('X', 100)),

CONSTRAINT [PK dbo.TestData RowNum]

PRIMARY KEY CLUSTERED (RowNum)

);The test queries are modified to reflect the change to char(100), and to sort by SomeCode ascending, and then by RowNum descending. This provides the long keys needed, and ordering by RowNum descending helps ensure that the rows arrive at the sort in exactly reversed order:

SELECT TOP (100)

@RowNum = TD.RowNum,

@SomeId = TD.SomeId,

@SomeCode = TD.SomeCode

FROM dbo.TestData AS TD

WHERE

TD.RowNum < 30000

ORDER BY

TD.SomeCode ASC,

TD.RowNum DESC

OPTION

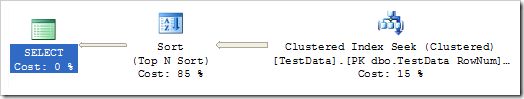

(MAXDOP 1);Again, both queries produce exactly the same query plan:

But the Profiler results are very different from last time:

This time, the TOP (100) query is more than ten times slower than TOP (101).

As an aside, note that the cost of key comparisons is crucial. I deliberately chose a compound key that differs only in the final few bytes and which requires comparison using expensive Windows collation rules.

Summary

SQL Server provides a secondary sort algorithm used by the Top N Sort iterator when 100 or fewer rows are requested.

This alternate algorithm uses less memory than a full sort, but can perform poorly if the sort keys are large and similar, and the keys happen to be presented to the Sort iterator in reverse order. The nature of the algorithm makes it unsuitable for larger or more general sorting needs.

Absolute best sort performance is obtained when the sort can be performed entirely in main memory, regardless of which algorithm is used. However, large sorts (or many concurrent smaller sorts) can quickly consume all of a SQL Server’s available query execution memory.

System designers may choose to create indexes to avoid some sorting operations altogether. They may also choose to reduce the average row size of intermediate result sets that pass through a Sort iterator to minimize memory grant requirements.

This may require careful query design, and may incur extra logical reads and higher CPU utilization on average. For many systems, good, predictable performance with high concurrency will be preferred over a wide range of best-case/worst-case execution times, and relatively poor resource concurrency.

Related Reading

- The original MSDN thread (archive link)

- Blog entry by Adam Haines

- Blog entry by Fabiano Amorim

- A related Connect Item (archive link)

- Books Online: Top N Sort Iterator