Row Goals and Grouping

Introduction

You might recall from Inside the Optimizer: Row Goals In Depth that query plans containing a row goal tend to favour nested loops or sort-free merge join over hashing.

This is because a hash join has to fully process its build input (to populate its hash table) before it can start probing for matches on its other input. Hash join therefore has a high start-up cost, balanced by a lower per-row cost once probing begins.

In this post, we will take a look at how row goals affect grouping operations.

Grouping Strategies

While the start-up cost of hash join often makes it unsuitable for plans with a row goal, there are times when hashing operations may feature in such plans since the Hash Match iterator also supports a streaming mode.

As an example, say we are asked to list one hundred unique first names from the AdventureWorks Contacts table:

SELECT TOP (100)

FirstName

FROM Person.Contact

GROUP BY

FirstName;

-- Or, equivalently

SELECT DISTINCT TOP (100)

FirstName

FROM Person.Contact;The optimizer has two strategies to choose from here.

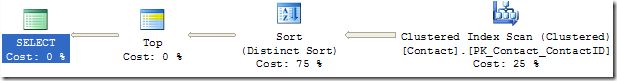

The first is to sort the records by FirstName, then return one row from each group. Conceptually, this involves a Sort followed by a Stream Aggregate. In practice, SQL Server collapses the whole operation into a single Sort iterator, running in Distinct Sort mode:

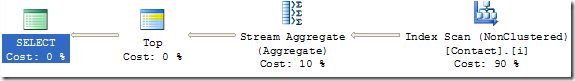

If rows already sorted by FirstName are available (perhaps from an index or previous sorting operation earlier in the plan), only the Stream Aggregate part is required:

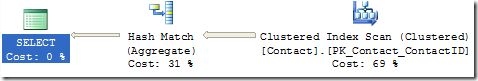

The second option is to hash the FirstName values, only storing a value if it has not already been encountered. Once all input rows have been consumed, we can scan the (unique) stored values to produce the required output:

The cheapest option is the Stream Aggregate, though it does require that pre-sorted rows are available.

Otherwise, the optimizer generally prefers hashing, especially for larger input sets with fewer groups, and where no particular output order is specified.

Sort Distinct is often the highest-cost option, unless a sorted output is required.

Blocking and Streaming

The Sort Distinct and Hash Match Aggregate iterators in the plans above are both blocking. They only start to produce output after consuming their entire input, and both require a memory grant at runtime.

The Sort iterator is blocking because it cannot determine which item sorts highest without examining all records, and it requires a memory grant to cache the rows it receives.

The Hash Match iterator requires a memory grant for its hash table.

The Stream Aggregate, as the name suggests, is a streaming (non-blocking) iterator. It consumes just as many rows from its child input as are necessary to produce the next distinct row.

Effect of a row goal

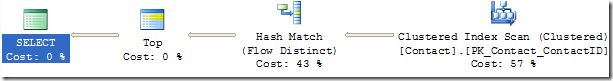

Where a row goal is specified, there is another implementation option. Unlike a hash join, a Hash Match Aggregate can also operate in a streaming mode, returning a new value for FirstName every time it encounters a value that it has not seen before. The use of a hash table makes it very cheap to check if the current value has been seen already.

When running in this streaming mode, the Hash Match iterator shows that it is performing a Flow Distinct physical operation:

This is the plan produced by the optimizer for our example query. There are no fully blocking operators, though the Hash Match does still require a small memory grant to ‘remember’ the values it has already seen.

Choosing flow distinct

The query plan chosen by the optimizer is a cost-based decision, based in part on statistical information. In general, the optimizer chooses a Flow Distinct where it determines that fewer output rows are required than there are distinct values in the input set.

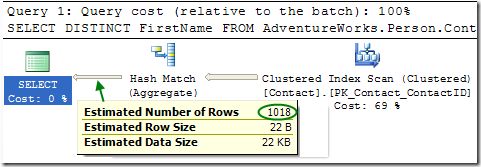

To see how many distinct values (and hence how many groups) the optimizer expects, we can examine the estimated execution plan for the same query without the TOP clause:

This shows that the optimizer expects 1018 unique values in the FirstName column. The optimizer will choose a Flow Distinct implementation if the row goal is 1017 or less. You can experiment with different TOP or OPTION (FAST n) values to verify that is the case.

To see how the optimizer ‘knows’ that 1018 groups exist, let’s look at the statistical information for the FirstName column:

DBCC SHOW_STATISTICS

(

[Person.Contact],

[FirstName]

)

WITH

STAT_HEADER,

DENSITY_VECTOR,

HISTOGRAM;Partial output:

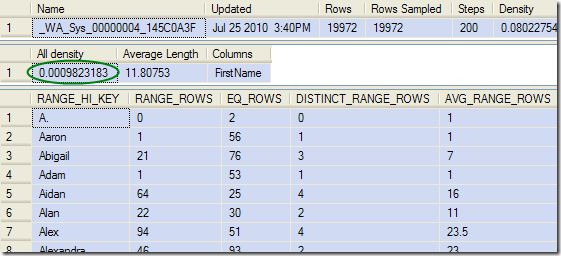

The DISTINCT_RANGE_ROWS column shows how many distinct values are contained in each step of the histogram, excluding the RANGE_HI_KEY values. Adding all the DISTINCT_RANGE_ROWS values together results in a total of 818, to which we add the 200 unique RANGE_HI_KEY values, producing a total number of expected unique values of 1018, as was shown in the estimated plan.

This information is also available directly from the all density value circled in green. ‘All density’ is an estimate of 1/(number of distinct values), so taking the reciprocal of 0.0009823183 also gives the expected number of unique values.

The full calculation performed inside the optimizer takes into account the sampled number of rows compared to the current table cardinality (which might have changed since the statistics were recorded). In this case, the 19,972 rows sampled by the statistics exactly matches the current table cardinality, so no adjustment was necessary.

That’s all for today, next time I’ll take a look at row goals with sorting operations.